Data Protection, Data Classification and Tiering

One of the best things about my job is that I get to talk to customers every day about the challenges they’re facing. I get to act as a pollinator, collecting ideas and perspectives, adding my own into the mix, then sharing them and helping those customers determine what solutions are available and which might be a good fit for their environment.

Over the last year, I’ve been seeing the conversations turn more and more to data protection. Customers are facing tighter service level objectives with much more data, and the industry offers more options, so traditional scheduled backups are no longer adequate to address every workload in the environment. Here’s what I think is the best approach to getting data protection under control. This is a simplified version; we can get a lot more sophisticated to address specific situations.

Business requirements and data classification

The first thing in this case is the hardest, and progress usually stalls here. Business requirements are crucial to having a *good* data protection strategy. Without business requirements, an IT organization will have to “give it their best shot”, and that may or may not be in line with what upper management, or the business unit, or the application owner expects. Another way of looking at it is that we need the business requirements to build a good design, which we need to have a successful implementation, which we need before we migrate or transition from the existing system, which we will then operate as our live environment. At Varrow, and now as Sirius, we designed our services around this concept, summarized as “Assess > Architect > Implement > Transition > Operate”. The output of each step feeds directly into the next, like a flow chart. As technical people, we like to start in the Architect (or Design) phase, or sometimes in the Implement phase, and figure it out as we go. The downside of that is we (the IT organization) end up making up our own business requirements, using assumptions that may not be in line with reality. Based on the conversations we have on a weekly basis, those assumptions very likely *don’t* line up with reality, and no one finds out until things go sideways.

So back to the point: a lot of customers don’t know how to get the business requirements, or they don’t have time to do that while simultaneously running their environment. In these cases, I recommend a data classification assessment and data protection assessment. This is not just a tool that crawls your environment and spits out a report showing where your data lives. Instead, it should be interview driven so that the business units or application owners can give their perception of what service level they think they’re already getting, as well as what service level they believe their data requires. The results of this kind of assessment are usually eye-opening for an organization, and can really help IT justify the requests they make. If your IT organization already has the business requirements (not just “I’ve worked here for years and know what they expect”, but real requirements from the business units for each of the many types of data that exist in the environment), then it’s ready for designing a solid data protection strategy.
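
To make the interview-driven idea a little more concrete, here is a minimal sketch of how the answers could be compared once they are collected. Everything in it is an assumption for illustration: the `Answer` fields, the application names, and the numbers are hypothetical, and the "delivered" value stands for whatever IT measures it actually provides today.

```python
from typing import NamedTuple


class Answer(NamedTuple):
    """One interview result for one application (all values hypothetical)."""
    app: str
    perceived_rpo_h: float   # what the owner thinks they get today
    required_rpo_h: float    # what the owner says the data needs
    delivered_rpo_h: float   # what IT actually delivers today


def unmet(answers):
    """Apps where the delivered RPO is worse (larger) than the required RPO."""
    return [a.app for a in answers if a.delivered_rpo_h > a.required_rpo_h]


def misperceived(answers):
    """Apps where the owner believes they get a better RPO than is delivered."""
    return [a.app for a in answers if a.perceived_rpo_h < a.delivered_rpo_h]


# Hypothetical interview results, RPO in hours.
answers = [
    Answer("ERP", perceived_rpo_h=1, required_rpo_h=1, delivered_rpo_h=24),
    Answer("File shares", perceived_rpo_h=24, required_rpo_h=24, delivered_rpo_h=24),
    Answer("Intranet", perceived_rpo_h=4, required_rpo_h=48, delivered_rpo_h=24),
]

print("Requirement not met:", unmet(answers))
print("Expectation mismatch:", misperceived(answers))
```

The two lists are the part that tends to be eye-opening: the first shows where the requirement isn't being met, the second shows where the owner's expectation doesn't match what they're getting, even if the requirement itself is satisfied.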

Designing for data protection

Here’s the part that customers usually jump to first, but that requires the groundwork in the section above: determining which technologies make the most sense in your environment. The business requirements will probably show a small amount of data that’s very, very important, and large amounts of data that the company can function without for a reasonably long period of time. So, in most environments, if an IT org used a single method to address all of the data in their environment, they would end up either paying too much or failing to meet their most demanding RTOs / RPOs.

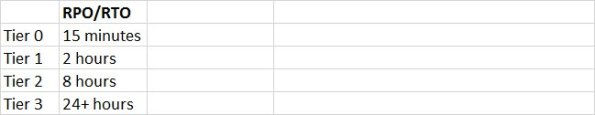

Because of this, the vast majority of customers will want to establish tiers with different service level objectives (RPO, RTO, uptime), and tier-0 for basic infrastructure that everything depends on. Just to reiterate: These are design decisions that should be driven by the business requirements. For example, if for whatever reason your organization only has a single type of data, then maybe a single tier and single approach would be just fine for you. (But, that case is an exception.)
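
As a rough sketch of what "establishing tiers" means in practice, the snippet below places a workload in the least demanding tier that still satisfies its stated RPO and RTO. The tier names and hour values are hypothetical placeholders, not a recommendation, and Tier 0 is left out because it is treated here as reserved for shared infrastructure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    rpo_hours: float
    rto_hours: float


# Hypothetical tiers, ordered from least to most demanding
# (which usually also means least to most expensive).
TIERS = [
    Tier("Tier 3", rpo_hours=24, rto_hours=24),
    Tier("Tier 2", rpo_hours=4, rto_hours=4),
    Tier("Tier 1", rpo_hours=0.25, rto_hours=0.25),
]


def assign_tier(required_rpo_hours: float, required_rto_hours: float) -> Optional[Tier]:
    """Return the least demanding tier that meets both requirements."""
    for tier in TIERS:
        if (tier.rpo_hours <= required_rpo_hours
                and tier.rto_hours <= required_rto_hours):
            return tier
    return None  # nothing is tight enough; this workload needs its own design


print(assign_tier(48, 48))   # a workload that can tolerate two days
print(assign_tier(24, 1))    # loose RPO, but a tight RTO drives the tier
```

Walking the list from cheapest to most expensive is the design choice that matters: a workload only lands in a costlier tier when its requirements force it there.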

Once we have these tiers defined, then, finally, we can start talking about technologies to meet those SLOs. Here’s an example of what these tiers might look like. For simplicity, I’ll keep RPO and RTO equal, though there are many situations where they might be different.

Tier 3

Armed with this, we can determine technologies that will address each of the tiers. I think it’s best to start with the Tier 3 data and build upwards. Most organizations already have a backup application, so as long as it’s robust and reliable it should be able to accommodate Tier 3.

Keep in mind that speeds and feeds (read throughput, concurrent threads, etc) get important when designing for a specific RTO. If your backup target can only restore half of your data in 24 hours, then you won’t meet a 24 hour RTO if your only storage array fails. In that scenario, you’ll also need something to restore that data onto, so the rabbit hole can get pretty deep pretty quickly depending on what kinds of failures need to be protected against.
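
The arithmetic behind that is simple enough to sketch. The figures below (100 TB of data, 600 MB/s of sustained restore throughput) are made-up numbers for illustration, and the calculation assumes the restore runs at a constant rate with nothing else in the way, which is optimistic.

```python
def restore_hours(data_tb: float, throughput_mb_per_s: float) -> float:
    """Hours needed to restore data_tb terabytes at a sustained read rate."""
    data_mb = data_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return data_mb / throughput_mb_per_s / 3600


def meets_rto(data_tb: float, throughput_mb_per_s: float, rto_hours: float) -> bool:
    """True if a full restore fits inside the RTO window."""
    return restore_hours(data_tb, throughput_mb_per_s) <= rto_hours


# Hypothetical environment: 100 TB to restore at 600 MB/s sustained.
hours = restore_hours(100, 600)
print(f"Full restore: {hours:.1f} h, meets 24 h RTO: {meets_rto(100, 600, 24)}")
```

With these numbers a full restore takes roughly 46 hours, so only about half the data is back inside a 24 hour window, which is the situation described above.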

Another point is that recovery in this context is only one aspect of “Backups”. Backups will actually be used most often for operational recovery (“I’ve lost a file or server due to corruption or misuse, please restore it for me”), and also used for retention, compliance, and other things. So what we’re doing is making the most of our backup solution by leveraging it for our recovery plans, and squeezing more value from it.

Tier 2

Moving up to Tier 2 in our example, we would probably want to use array based or hypervisor based snapshots to meet a 4 hour RPO/RTO. Restoring from backup may be possible for a subset of data, but for a larger recovery it’s going to be unlikely that 4 hours is enough time to restore from backup. (If it is, you may have oversized your backup solution). Ideally, these snapshots would be managed in the backup software, and we’re seeing an industry trend toward that.

Tier 1

Tier 1 gets more demanding, and more expensive. We probably don’t want to do snapshots that often, for several reasons. First, it’s demanding on the array or hypervisor. Second, it’s probably triggering VSS in the guest OS, and doing that in rapid succession can have performance implications. So, to address this we would probably recommend some kind of CDP (Continuous Data Protection) solution. If the price makes sense, this could be the same technology we’d use for Tier 2 as well.

Tier 0

Finally, for Tier 0 apps the requirement delta may seem small, but there’s a big difference, and the price usually goes up pretty sharply. Basically, here all we have time for is a guest OS reboot or an application level failover. That means this would have to be an HA event, and not a restore or DR-type failover. We’ll need to remove any single points of failure (SPOFs) all the way down the stack, including storage. For commodity servers, we would probably look at something like VMware MSC.

Application Level Data Protection

Another industry trend that makes a lot of sense is the idea of moving data services up the stack, to the application layer. You can get a lot more intelligence built in by handling it there, at the cost of decentralizing management and reporting. For example, your Exchange admin may be running a DAG (Database Availability Group), so she gets more intelligent failover, performance isn’t impacted by backups, and using local storage instead of a storage array removes the SPOF of a shared volume. In this example, the backup team probably doesn’t have visibility into the status of replication or the separate images, leaving a blind spot when it comes to managing and reporting on the overall health of the enterprise as it pertains to data protection. To address that, all of the major backup products are moving to integrate application level data protection with the overall data protection management platform. That would give you the benefits without losing visibility. This approach still requires the application owner to be responsible for managing the data protection. Some organizations may have historically kept that responsibility with the backup team, but as we get into these narrower SLOs it becomes more expensive to build this level of protection into the infrastructure.

Some examples

Okay, so what would this look like in real-world terms, instead of nebulous concepts?

I’ll start with commodity servers, like a Windows or Linux VM that runs an application service or daemon that doesn’t have native data protection built in. I’ll use EMC products in the example because I’ve spent most of my time working with EMC products over the last 5 years. There are other storage and replication products that can accomplish similar things.

The recovery automation for Tier 1 could be VMware Site Recovery Manager (SRM), a 3rd party product, or a script. For virtual machines, it could also be hypervisor based replication plus SRM, or both could be replaced with something like Zerto, which handles the replication, CDP, and failover automation.

Note that these tier numbers are subjective and you might choose to make this Tier 1 through 4 and reserve Tier 0 for infrastructure only.

All of these will require compute to be already in place for recovery at the time of the outage. If your recovery plan can tolerate 5 days RTO for example, then you might be able to get by with procuring server hardware after the incident occurs. If you’ve priced out these products, you’ll know that the price goes up quickly from Tier 3 to Tier 0. These kinds of decisions, with associated costs, are why business requirements are important.

What if it’s Microsoft Exchange, or SQL, or Oracle?

Because we don’t need infrastructure-level replication and HA, the cost is probably lower, and the tradeoff is that operationally the Exchange team has to manage all of their copies and local storage instead of leaving that to the infrastructure team.

The same approach can be used for Microsoft SQL with SQL Always-On. That might be a synchronous copy used for the top tier workloads and an asynchronous copy for looser SLOs and site resiliency. Oracle can do this with Data Guard. In each case, the application will probably handle recovery better, and the cost will probably (but not necessarily) be lower when data protection is handled natively. Also in each case, that pushes the responsibility away from the infrastructure team and over to the application team, so the operational aspects have to be planned out and well understood. There are also reporting tools, like EMC Data Protection Advisor and Rocket Servergraph, that give management teams visibility into the overall data protection health, even when different aspects are handled by different products that may not integrate directly.

While there are a lot of flashier new technologies out there that we’re all excited about, I’m having these kinds of data protection conversations often enough that I wanted to write this up.